Are you tired of encountering cryptic symbols and garbled text when you browse the web or work with digital documents? The world of character encoding, while often invisible, is the very foundation upon which we build our digital world, and understanding it is the key to unlocking a clear and consistent experience.

From the simplest of emails to the most complex websites, every piece of digital text is represented by a character encoding. These encodings act as a translator, converting human-readable characters into the binary code that computers understand. When these encodings are mismatched, the result is often a frustrating jumble of characters, a phenomenon we commonly refer to as mojibake or garbled text. The good news is that by gaining a better understanding of these systems, you can often diagnose and fix these issues, reclaiming the clarity of your digital communication.

The most widely used standard for text today is Unicode, which aims to represent every character from every writing system in the world. Think of it as the universal language of computers. Within the Unicode standard, each character is assigned a unique code point, a numerical value that identifies it. Unicode itself is a character set rather than an encoding; how code points are stored and transmitted as bytes is where encodings like UTF-8 and UTF-16 come into play. UTF-8, in particular, has become the dominant encoding for the web, prized for its backward compatibility with ASCII and its compact, variable-length representation (one to four bytes per character). When we talk about UTF-8 characters, we mean characters as they are stored in UTF-8 byte sequences.
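To make the difference between a code point and its encoded bytes concrete, here is a minimal Python sketch. The sample string and the helper name `describe` are illustrative assumptions, not part of any standard API.

```python
def describe(ch: str) -> dict:
    """Return the code point of one character and its size in two encodings."""
    return {
        "code_point": f"U+{ord(ch):04X}",
        "utf8_bytes": len(ch.encode("utf-8")),
        # "utf-16-le" avoids the 2-byte byte-order mark that "utf-16" prepends.
        "utf16_bytes": len(ch.encode("utf-16-le")),
    }

# Illustrative sample: an ASCII letter, an accented letter,
# a Chinese character, and an emoji.
sample = "Aé你😀"
for ch in sample:
    print(describe(ch))
```

The same code point can take a different number of bytes depending on the encoding, which is why the receiver has to know which encoding was used.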

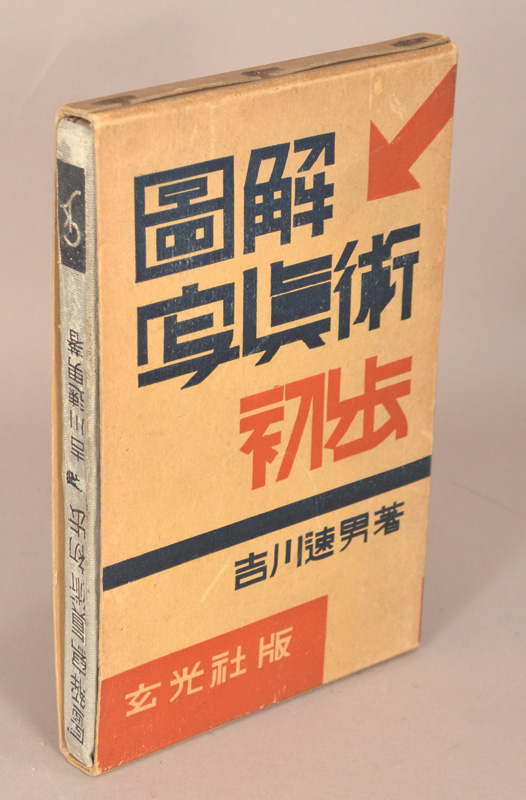

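Let's delve into a specific example. Consider the first entries of the Unicode block that starts at code position FF00 (U+FF00 itself is unassigned): U+FF01 ！ FULLWIDTH EXCLAMATION MARK (UTF-8 bytes EF BC 81), U+FF02 ＂ FULLWIDTH QUOTATION MARK (UTF-8 bytes EF BC 82), followed by U+FF03 and so on. Each entry pairs a character with its code point and its UTF-8 representation, which is how it is actually stored. If such a table is itself displayed with the wrong encoding, those three bytes show up as stray sequences like ï¼ instead of the character. The FULLWIDTH characters, often used in East Asian text, are designed to occupy the same width as a CJK ideograph rather than the narrower width of a standard Latin character.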
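Rows like these can be regenerated with Python's standard `unicodedata` module. The sketch below is only an illustration; the function name `fullwidth_rows` and its defaults are assumptions chosen for this example.

```python
import unicodedata

def fullwidth_rows(start: int = 0xFF01, count: int = 3):
    """Build (code point, name, UTF-8 hex bytes, NFKC form) rows for a range.

    The default starts at U+FF01 because U+FF00 is unassigned and
    unicodedata.name() raises ValueError for characters without a name.
    """
    rows = []
    for cp in range(start, start + count):
        ch = chr(cp)
        rows.append((
            f"U+{cp:04X}",
            unicodedata.name(ch),
            ch.encode("utf-8").hex(" ").upper(),  # bytes.hex(sep) needs Python 3.8+
            unicodedata.normalize("NFKC", ch),    # compatibility (ASCII) equivalent
        ))
    return rows

for row in fullwidth_rows():
    print(row)
```

The last column uses NFKC normalization, which maps each fullwidth form to its ordinary ASCII counterpart. That is handy when fullwidth punctuation needs to be compared with regular punctuation.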

But what happens when things go wrong? Imagine you receive a text that looks like this: 具有éœé›»ç¢çŸè£ç½®ä¹‹å½±åƒè¼¸å…¥è£ç½®. This is a classic example of garbled text, the product of an encoding mismatch. The characters å, ç, æ, é, etc., don't make any sense on their own. This particular pattern typically appears when text stored as UTF-8 is decoded with a single-byte Western encoding such as ISO-8859-1 or Windows-1252, so each byte of a multi-byte character is shown as a separate accented letter or symbol. The reverse mistake, reading bytes from a legacy encoding such as GBK as if they were UTF-8, produces replacement characters or decoding errors instead. Either way, the underlying bytes are being interpreted with the wrong rules.

This leads us to the practical side of dealing with these issues. Several tools and techniques can help untangle these messes. One popular Python library, `ftfy` ("fixes text for you"), is specifically designed to clean up text that has been corrupted by encoding errors. It detects common mojibake patterns and reverses them, making the text readable again. `ftfy.fix_text` and `ftfy.fix_file` are two of its most useful functions: the first repairs a string, the second works through the contents of a file. If you're working with a file containing garbled text, `ftfy.fix_file` can often be a quick and effective solution, though the repair is heuristic and cannot recover bytes that were already lost.
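As a rough sketch of how `ftfy.fix_text` might be wired into a script, the snippet below uses the library when it is installed and otherwise falls back to a single naive round trip. The helper name `repair` and the fallback are assumptions for illustration; the fallback is not how `ftfy` works internally and handles only one kind of mojibake.

```python
try:
    import ftfy  # third-party package: pip install ftfy
except ImportError:  # keep the sketch runnable without the dependency
    ftfy = None

def repair(text: str) -> str:
    """Repair mojibake with ftfy if available, else try one cp1252 -> UTF-8 round trip."""
    if ftfy is not None:
        return ftfy.fix_text(text)
    try:
        # Naive fallback: undo "UTF-8 bytes decoded as Windows-1252".
        return text.encode("cp1252").decode("utf-8")
    except (UnicodeEncodeError, UnicodeDecodeError):
        return text  # not this kind of mojibake; leave the text unchanged

# A check mark (U+2714) whose UTF-8 bytes were read as Windows-1252.
broken = "\u00e2\u0153\u201d No problems"
print(ascii(repair(broken)))
```

Text that is already clean should pass through unchanged, so a helper like this can be applied to mixed input.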

Let's consider another common scenario: the string 你好Java showing up as garbled text. It consists of the Chinese greeting 你好 followed by the word Java. If it appears as something unreadable, like “ä½ å¥½Java,” you're encountering an encoding problem: the UTF-8 bytes of the two Chinese characters have been decoded with a Western single-byte encoding, so each three-byte character turns into three unrelated symbols. The ASCII part, Java, survives because UTF-8 and the Western encodings agree on those bytes. ASCII itself, limited to the English alphabet, digits, and some symbols, cannot represent Chinese characters at all.
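This scenario can be reproduced, and reversed, with nothing but Python's built-in codecs. The sketch assumes the mismatch is UTF-8 read as ISO-8859-1 (`latin-1`), which maps every byte to a character and therefore makes the round trip lossless.

```python
original = "你好Java"

# Step 1: encode correctly as UTF-8, then decode with the wrong single-byte codec.
utf8_bytes = original.encode("utf-8")
garbled = utf8_bytes.decode("latin-1")

# Step 2: reverse the mistake by recovering the raw bytes and decoding as UTF-8.
repaired = garbled.encode("latin-1").decode("utf-8")

print(utf8_bytes.hex(" "))   # the bytes actually stored
print(ascii(garbled))        # the mojibake, shown with escapes
print(repaired == original)  # whether the round trip restored the text
```

The byte 0xA0 decodes to a no-break space in ISO-8859-1, which explains the gap in the middle of the garbled greeting. With Windows-1252 a few byte values are undefined, so the same round trip can fail for some strings.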

To fully grasp the scope of encoding issues, it's helpful to understand a few of the key players. Character sets like ISO-8859-1 (also known as ISO Latin 1) are older single-byte encodings designed for Western European languages; with only 256 possible values, they cannot represent characters from most other scripts. Then there's GBK, a multi-byte character encoding used for Simplified Chinese. It covers a large set of Chinese characters and keeps ASCII unchanged, but its byte sequences are incompatible with UTF-8 and with the ISO-8859 family. Finally, Unicode and its various encoding forms (like UTF-8) are designed to handle the widest range of characters from all languages.
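The differences between these encodings are easy to see by encoding the same text with each of them. This is a small sketch; `try_encode` is a hypothetical helper written for this example.

```python
def try_encode(text: str, encoding: str):
    """Return the encoded bytes as hex, or None if the encoding cannot represent the text."""
    try:
        return text.encode(encoding).hex(" ")
    except UnicodeEncodeError:
        return None

text = "你好"
for encoding in ("iso-8859-1", "gbk", "utf-8"):
    print(encoding, try_encode(text, encoding))

# GBK and UTF-8 use different bytes for the same characters, so bytes
# written in one cannot simply be read as the other.
gbk_bytes = text.encode("gbk")
try:
    gbk_bytes.decode("utf-8")
except UnicodeDecodeError as exc:
    print("GBK bytes are not valid UTF-8:", exc.reason)
```

ISO-8859-1 has no way to represent the two characters at all, while GBK and UTF-8 both can but disagree on the bytes.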

When developing web applications, character encoding issues are very common. Always declare the character encoding in your HTML documents using the `<meta>` tag: `<meta charset="UTF-8">`. This tells the web browser how to interpret the text content. Choosing UTF-8 is generally recommended, as it supports a wide range of characters and is the most compatible with modern web standards. On the server side, ensure that your application, database, and any other related systems are also configured to use UTF-8.
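On the server side, the declared encoding and the actual bytes have to agree. The following framework-independent sketch shows one way to keep them in sync; `build_html_response` is a hypothetical helper, and a real application would normally rely on its web framework for this.

```python
import html

def build_html_response(body_text: str):
    """Return (headers, body bytes) for an HTML page declared and encoded as UTF-8."""
    page = (
        "<!DOCTYPE html>\n"
        '<html><head><meta charset="UTF-8"><title>Demo</title></head>\n'
        f"<body><p>{html.escape(body_text)}</p></body></html>\n"
    )
    body = page.encode("utf-8")  # the bytes must match the declared charset
    headers = {
        "Content-Type": "text/html; charset=utf-8",
        # Content-Length counts bytes, not characters.
        "Content-Length": str(len(body)),
    }
    return headers, body

headers, body = build_html_response("你好Java")
print(headers)
```

Declaring UTF-8 in both the HTTP header and the `<meta>` tag, and encoding the body with the same codec, removes the guesswork for the browser.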

Another important tool in the arsenal for handling text encoding is the Unicode table. Numerous online resources provide complete lists of Unicode characters, allowing you to look up the code point for a specific character or symbol. Websites like BRANAH.COM offer comprehensive tables, where you can find characters used in various languages, emojis, arrows, musical notes, and more. These tables are useful for identifying the correct Unicode values and understanding the range of characters supported.
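For quick lookups without leaving your editor, Python's standard `unicodedata` module exposes the same character database that such tables are built from. A minimal sketch, with `describe_char` as a hypothetical helper name:

```python
import unicodedata

def describe_char(ch: str) -> str:
    """Format a character as 'U+XXXX NAME', like one row of a Unicode table."""
    return f"U+{ord(ch):04X} {unicodedata.name(ch, '<unnamed>')}"

# Look up a character's code point and official name.
print(describe_char("A"))

# Go the other way: find a character from its official name.
arrow = unicodedata.lookup("RIGHTWARDS ARROW")
print(describe_char(arrow))
```

The names returned are the official Unicode character names, so they can be searched for directly in an online table.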

In summary, understanding character encoding is vital for anyone who works with digital text. Whether you are writing code, creating websites, or simply reading emails, a grasp of these concepts will empower you to troubleshoot problems, fix errors, and ensure that your digital information is displayed correctly. By using tools like `ftfy` and embracing best practices like using UTF-8, you can overcome the common pitfalls of character encoding and reclaim the clarity of your digital world. Remember the core concept: character encoding is about translating between the symbols and the bits, and when that translation goes wrong, your information can become a mess.

The following table provides a concise reference for the key terms, encoding schemes, and important considerations covered above:

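| Aspect | Details |
|---|---|
| Definition | A system for representing characters (letters, numbers, symbols, etc.) as numerical codes that computers can understand and process. |
| Purpose | To enable the consistent storage, transmission, and display of text data across different systems and platforms. |
| Key Encodings | UTF-8 and UTF-16 (encoding forms of Unicode); ISO-8859-1 (Western European languages); GBK (Simplified Chinese). |
| Unicode | A character set that aims to assign a unique code point (a numerical value) to every character from every language in the world. Unicode provides a universal standard for character representation. |
| Code Point | A unique numerical value assigned to a character in Unicode (e.g., U+0041 for the letter A). |
| Encoding vs. Character Set | A character set assigns a code point to each character; an encoding defines how those code points are stored and transmitted as bytes. |
| Common Problems | Mojibake (garbled text) when text written in one encoding is read as another, e.g. 你好Java displayed as ä½ å¥½Java. |
| Troubleshooting | Identify the mismatched encodings; repair the text with tools such as `ftfy` (`ftfy.fix_text`, `ftfy.fix_file`); look up code points in a Unicode table. |
| Best Practices | Declare the encoding in HTML with `<meta charset="UTF-8">`; use UTF-8 consistently across the application, database, and related systems. |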

By utilizing these concepts and tools, you’ll be well-equipped to successfully navigate the world of character encodings and maintain the integrity of your digital text.